Processing NPI test data with AWS Amazon Machine Learning

by Tanja Nyberg

Posted on August 20, 2018 at 10:00 PM

I was very excited to do my final boot camp project in the field of machine learning. Unfortunately, I was out of town when people formed teams, so instead of working in a group I decided to check out an off-the-shelf product. I chose a data set with results of the Raskin & Terry “Narcissistic Personality Inventory” (NPI) test, which I found on Open psychology, a fully accessible data website. The data set contains the answers of about 11,624 individuals to the 40 questions of the test. I got the data set (dataset.00) as a *.csv file (click here or on the Process map button at the bottom of the page to get the process map with downloadable files). It was the right size for my experiments. I separated the data into two parts: one for creating an ML model and the other for creating predictions and comparing them with the actual answers. The average score on the NPI test is about 13; 20 years ago it was just 11. So the more narcissistic individuals live among us, the more important this test can become.
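A minimal sketch of how such a two-part split could be done with the Python standard library. The file names, the 50/50 ratio, and the fixed random seed are assumptions for illustration; the post does not state how the rows were actually divided.

```python
import csv
import random


def split_csv(src_path, train_path, holdout_path, holdout_fraction=0.5, seed=42):
    """Shuffle the rows of a CSV file and write them to two new files.

    Both output files get a copy of the header row. Returns the number of
    rows written to the training file and to the holdout file.
    """
    with open(src_path, newline="") as src:
        reader = csv.reader(src)
        header = next(reader)
        rows = list(reader)

    # Shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(rows)

    n_holdout = int(len(rows) * holdout_fraction)
    parts = ((holdout_path, rows[:n_holdout]), (train_path, rows[n_holdout:]))
    for path, part in parts:
        with open(path, "w", newline="") as out:
            writer = csv.writer(out)
            writer.writerow(header)
            writer.writerows(part)

    return len(rows) - n_holdout, n_holdout
```

The training file would be used to build the model, and the holdout file would be kept aside for the batch predictions and the final comparison.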

Which service should I use? I found descriptions of four services:

- Amazon AWS (Machine Learning and SageMaker);

- Microsoft Azure;

- Google (Prediction API and Cloud Machine Learning Engine);

- IBM Watson Machine Learning Studio;

I set my sights on Machine Learning from Amazon AWS. They got my attention with their intro. You can choose what you want to do, and there are many tutorials that show you how to do it. They offer a great many services, and some of them have goofy names, which actually makes them easy to remember. You will need to invest time in learning to navigate the start page, because there is too much on it to take in without a good understanding of the services. The Machine Learning tutorial, with a working data sample, was sufficient and pretty well tailored.

As I had guessed, AWS requires the data to be stored in its own storage services: S3 or Amazon Redshift, a data warehouse with querying capabilities over structured data. AWS offers four ways to access the machine learning service:

- Amazon ML console;

- AWS CLI;

- Amazon ML API;

- AWS SDKs;

For the third method (the Amazon ML API) I found two Python scripts on GitHub:

- one to build an ML model;

- one to use this model for batch predictions;

The scripts were written for Python 2, so I adapted them for Python 3. To write Python code for AWS you need the Boto3 library, which is not very complicated.
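The two steps can be sketched roughly as follows. This is not the GitHub code mentioned above, only an illustration of the Boto3 calls involved. The `client` argument is expected to behave like `boto3.client("machinelearning")`; the resource IDs and names, the `name` prefix, the use of a schema file stored in S3, and the choice of a `REGRESSION` model are all assumptions.

```python
def build_model(client, train_s3_url, schema_s3_url, name="npi"):
    """Register the training data in Amazon ML and start training a model.

    Returns the (hypothetical) IDs chosen for the data source and the model.
    """
    data_source_id = f"{name}-train-ds"
    model_id = f"{name}-model"
    client.create_data_source_from_s3(
        DataSourceId=data_source_id,
        DataSourceName=f"{name} training data",
        DataSpec={
            "DataLocationS3": train_s3_url,
            "DataSchemaLocationS3": schema_s3_url,
        },
        ComputeStatistics=True,  # statistics are needed for training data
    )
    client.create_ml_model(
        MLModelId=model_id,
        MLModelName=f"{name} score model",
        MLModelType="REGRESSION",  # assumption: the NPI score is a number
        TrainingDataSourceId=data_source_id,
    )
    return data_source_id, model_id


def batch_predict(client, model_id, holdout_s3_url, schema_s3_url,
                  output_s3_url, name="npi"):
    """Register the holdout data and request batch predictions for it.

    Returns the (hypothetical) ID chosen for the batch prediction.
    """
    data_source_id = f"{name}-holdout-ds"
    batch_id = f"{name}-batch"
    client.create_data_source_from_s3(
        DataSourceId=data_source_id,
        DataSourceName=f"{name} holdout data",
        DataSpec={
            "DataLocationS3": holdout_s3_url,
            "DataSchemaLocationS3": schema_s3_url,
        },
        ComputeStatistics=False,  # not needed for prediction-only data
    )
    client.create_batch_prediction(
        BatchPredictionId=batch_id,
        BatchPredictionName=f"{name} batch predictions",
        MLModelId=model_id,
        BatchPredictionDataSourceId=data_source_id,
        OutputUri=output_s3_url,
    )
    return batch_id
```

With a real client, `build_model` would be called first and `batch_predict` once the model has finished training. These calls only start the work, so a real script would check the model status (for example with `get_ml_model`) before reading any results from the output location in S3.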

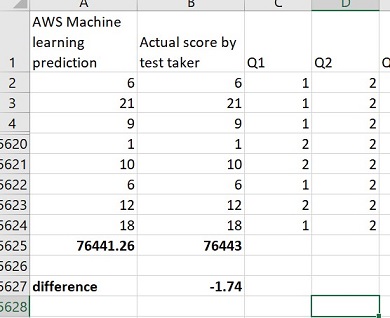

The result was astonishing. Almost all scores were predicted correctly: across more than 5,000 results, the total difference was less than 2!
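One way such a comparison between predicted and actual scores could be computed is sketched below. The function name and the two summary figures (net difference and mean absolute error) are assumptions; the post does not specify exactly how the total difference was calculated.

```python
def compare_scores(actual, predicted):
    """Summarise how far predicted scores are from the actual ones.

    Returns the number of compared pairs, the net (signed) sum of the
    differences, and the mean absolute error per prediction.
    """
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("nothing to compare")

    diffs = [p - a for a, p in zip(actual, predicted)]
    return {
        "n": len(diffs),
        "net_difference": sum(diffs),
        "mean_abs_error": sum(abs(d) for d in diffs) / len(diffs),
    }
```

The net difference can be close to zero even when individual predictions are off, because over- and underestimates cancel out, so the mean absolute error is worth looking at alongside it.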

What didn’t I like about AWS? The cost optimization system, which is almost non-existent. Explaining the pricing plan is beyond the scope of this article. Forecasting and budgeting are not the same thing, and lumping them together under one term is an old car-salesman tactic.

A system that lets you plan, forecast and budget the cost of using their models should make it possible to predict your costs from a comprehensive cost plan. What does AWS offer? It is more like cost monitoring: you can set limits, which doesn’t make much sense if you are using real-time